The DSLR Will Likely Die: Is Mirrorless the Future of Big Standalone Cameras?


People often ask me whether DSLRs will survive, given the improvement and ubiquity of cell phones. This actually entails two slightly different questions: will standalone, large-ish cameras survive, and will the particular reflex design (the R in DSLR) survive? I am cautiously optimistic about the former and very pessimistic about the latter. In this piece, I focus on the latter: DSLR vs. mirrorless.

Historical Perspective

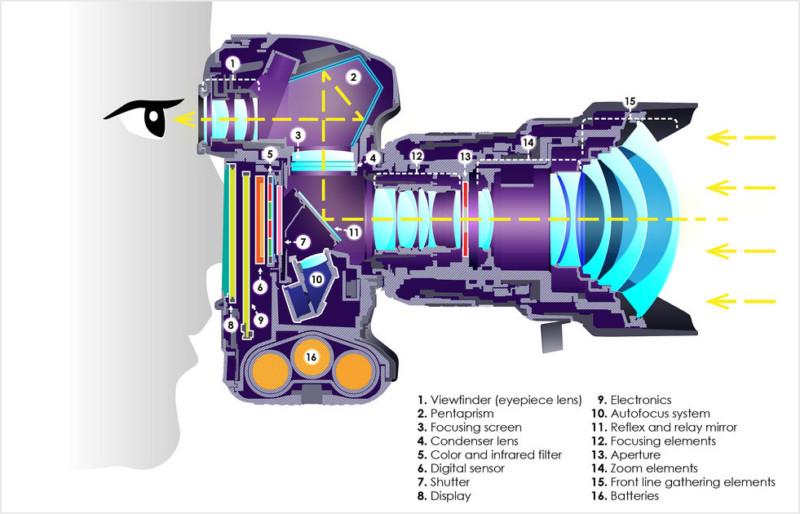

Let’s see why I think the reflex design is doomed, even though it has dominated serious photography for decades. DSLR stands for digital single-lens reflex. The term reflex comes from reflection and means that the photographer sees an optical image through the viewfinder thanks to a mirror placed at 45 degrees in front of the sensor.

The mirror needs to be flipped up when taking a photograph, which, together with the shutter, produces the characteristic sound of an SLR. In contrast, in rangefinder or twin-lens reflex cameras (those old-looking cameras with two lenses), the photographer sees the scene from an offset viewpoint, which can result in parallax error, where the captured image is not exactly what was expected.

Historically, the reflex design has proven superior for two main reasons. First, the photographer sees exactly the image that will be taken “through the lens.” This in turn makes it possible to have a rich set of interchangeable lenses. In contrast, it is harder to change a lens on a rangefinder or twin-lens reflex because you also need to change the viewfinder lens or have marks that show the field of view of different lenses.

Second, and this came much later, the SLR design enables superior autofocus thanks to a secondary optical path through parts of the mirror to specialized AF sensors. These sensors essentially perform stereo vision between viewpoints at the edges of a lens for a discrete set of AF points on the image plane. This is often called phase-based autofocus, although stereo would be clearer in my opinion.
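To make the stereo analogy concrete, here is a minimal Python sketch. It assumes a simplified model in which each AF point delivers two 1D intensity profiles, one seen from each edge of the lens, and it finds their relative shift by brute-force matching with the mean absolute difference. The function name `estimate_disparity`, the matching cost, and the synthetic data are illustrative assumptions, not how any particular camera implements it.

```python
import random

def estimate_disparity(left, right, max_shift):
    """Return the integer shift d (in pixels) that best aligns the two views,
    i.e. minimizes the mean absolute difference between left[i] and right[i + d]."""
    n = len(left)
    best_shift, best_cost = 0, float("inf")
    for d in range(-max_shift, max_shift + 1):
        # Only compare indices where both left[i] and right[i + d] exist.
        lo, hi = max(0, -d), min(n, n - d)
        cost = sum(abs(left[i] - right[i + d]) for i in range(lo, hi)) / (hi - lo)
        if cost < best_cost:
            best_shift, best_cost = d, cost
    return best_shift

# Synthetic 1D texture seen by a hypothetical pair of AF line sensors.
rng = random.Random(0)
scene = [rng.random() for _ in range(200)]
left_view = scene
# The right view sees the same texture displaced by 4 pixels: right[i + 4] == left[i].
right_view = scene[-4:] + scene[:-4]

print(estimate_disparity(left_view, right_view, max_shift=10))  # 4
```

In this model, a shift of zero means the subject is in focus; the size of the shift indicates how far the lens is from focus and its sign indicates the direction, which is why a single measurement is enough.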

Video and digital cameras have fundamentally changed the photography landscape by enabling another type of “through-the-lens” viewing, where the sensor used to capture the final image is also used for preview, albeit on an electronic screen rather than directly optically. Originally, though, this came at the cost of inferior autofocus performance because, without a mirror, there was no way to route light toward dedicated phase-based sensors. As a result, non-reflex digital cameras first used slow contrast autofocus.

In a nutshell, they had to sweep through multiple possible distance settings to find the sharpest one (highest contrast), whereas phase-based autofocus could directly compute the correct distance in one step. Note that when DSLRs are used for video, however, they must keep the mirror up. This means that they are back to the same constraints as other cameras, and would originally use slow contrast autofocus.
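Contrast autofocus can be sketched as a search loop. The toy model below rests on stated assumptions: the scene is a 1D step edge, defocus is modeled as a box blur whose radius equals the distance from the correct focus position, and the sharpness score is the sum of squared differences between neighboring samples. `capture_at` is a hypothetical stand-in for taking a frame at a given lens position.

```python
def box_blur(signal, radius):
    """Average each sample with its neighbors within `radius` (edges clamped)."""
    n = len(signal)
    out = []
    for i in range(n):
        window = [signal[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out

def contrast(signal):
    """Sharpness score: sum of squared differences between neighboring samples."""
    return sum((b - a) ** 2 for a, b in zip(signal, signal[1:]))

def capture_at(scene, focus_pos, true_focus):
    """Hypothetical camera model: blur grows with distance from the true focus."""
    return box_blur(scene, abs(focus_pos - true_focus))

def contrast_autofocus(scene, true_focus, positions):
    """Capture one frame per candidate focus position and keep the sharpest.
    Returns (best position, number of frames captured)."""
    best_pos, best_score, frames = None, -1.0, 0
    for pos in positions:
        score = contrast(capture_at(scene, pos, true_focus))
        frames += 1
        if score > best_score:
            best_pos, best_score = pos, score
    return best_pos, frames

scene = [0.0] * 50 + [1.0] * 50  # a single sharp edge
print(contrast_autofocus(scene, true_focus=13, positions=range(21)))  # (13, 21)
```

Unlike the phase-based case, this loop needs a frame at every candidate position (21 here) before it knows where focus is. Practical systems typically search more cleverly than an exhaustive scan, but they still need several frames, which is what makes contrast autofocus slow.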

A breakthrough occurred when camera manufacturers modified cameras to perform phase-based (or stereo) autofocus directly using the main sensor. The idea is to split some or all pixels into two sub-pixels, each capturing light coming from only one half of the lens. The camera can then perform stereo between the two resulting images, whose viewpoints lie roughly at the center of each half of the lens aperture. This explains the dramatic improvement in autofocus ability for non-DSLR cameras such as the various mirrorless systems (Sony, Olympus). This technology has also been integrated into some DSLRs, notably Canon’s Dual Pixel CMOS AF, because it is needed for video shooting.
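A minimal sketch of the split-pixel idea follows, under loudly hypothetical assumptions: the raw row is read out interleaved as left and right sub-pixel values, each sub-pixel receives half of the pixel’s light, and out-of-focus content appears displaced between the two half-images. The ordinary image pixel is the sum of its two sub-pixels, while the two half-images are compared as a stereo pair. The readout layout, the synthetic data generator `make_raw`, and all other function names are illustrative, not any manufacturer’s format.

```python
import math
import random

def split_dual_pixel_row(raw):
    """Split a hypothetical interleaved row [L0, R0, L1, R1, ...] into the
    left-half-aperture and right-half-aperture images."""
    return raw[0::2], raw[1::2]

def combine(left, right):
    """Each ordinary image pixel is the sum of its two sub-pixels."""
    return [a + b for a, b in zip(left, right)]

def disparity(left, right, max_shift):
    """Shift d minimizing the mean absolute difference of left[i] vs right[i + d]."""
    n = len(left)
    best_d, best_cost = 0, float("inf")
    for d in range(-max_shift, max_shift + 1):
        lo, hi = max(0, -d), min(n, n - d)
        cost = sum(abs(left[i] - right[i + d]) for i in range(lo, hi)) / (hi - lo)
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d

def make_raw(scene, shift):
    """Synthetic data: each sub-pixel gets half the light, and the right
    half-image is displaced by `shift` pixels relative to the left one."""
    n = len(scene)
    raw = []
    for i in range(n):
        raw += [0.5 * scene[i], 0.5 * scene[(i - shift) % n]]
    return raw

rng = random.Random(1)
scene = [rng.random() for _ in range(200)]

left, right = split_dual_pixel_row(make_raw(scene, shift=0))
print(disparity(left, right, max_shift=10))  # 0 -> in focus
print(all(math.isclose(p, s) for p, s in zip(combine(left, right), scene)))  # True

left, right = split_dual_pixel_row(make_raw(scene, shift=5))
print(disparity(left, right, max_shift=10))  # 5 -> defocused
```

Because the same photodiodes also produce the final image, no separate optical path is needed, which is what lets this approach work with the mirror up or with no mirror at all.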

For computational photography nerds, this is very similar to a light field camera, with just two subpixels. DSLR autofocus usually still has an edge because the dedicated sensor has better performance, in particular in low light, but the advantage is getting smaller and smaller.

Sony was the first to challenge DSLR dominance at the high end with its widely applauded a7 series, and Canon and Nikon have recently released full-frame mirrorless cameras. It is notable that, in both cases, these camera systems target high-end users, with their full-frame sensors and expensive lenses. This has everyone on the photography internet wondering if the DSLR is doomed. My personal prediction is yes, although this will probably take some time. After all, Nikon still sells a film SLR, the F6.

Why the Mirror Has to Go

The DSLR has to die because the mirror is too high a cost for its diminishing benefits. The mirror adds to the complexity of the camera and its manufacturing, it makes the camera bigger, and it introduces challenges for lens design. And its advantages don’t extend to video, which is an increasingly important use case.

The mirror and phase-based autofocus system make camera design more complex, with additional optical and mechanical challenges and the need for precise calibration between the various parts. They also increase the risk of mechanical failure (although the shutter mechanism is a primary offender, a problem that good global electronic shutters will hopefully solve eventually). The manufacturing of a mirrorless camera is much easier to automate because it has fewer moving parts and requires less calibration. And obviously, the mirror takes up space, which makes the body larger.

Furthermore, the mirror in a DSLR severely constrains lens design by preventing lens elements from being placed near the sensor. This is particularly unfortunate for wide-angle and large-aperture lenses. Both Canon and Nikon emphasize this extra flexibility and have focused their new lens offerings on designs that would be hard to achieve under the constraints of SLRs. Canon has a 28-70mm f/2, whereas the largest aperture available on a 24-70mm zoom for DSLRs is f/2.8. Nikon’s new 35mm shows significantly better sharpness (MTF) than its SLR equivalent.
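For a sense of what the f/2 versus f/2.8 comparison means in practice, here is a quick worked ratio, assuming the usual rule that the light reaching each unit of sensor area scales with the inverse square of the f-number N (and ignoring differences in lens transmission):

$$
\frac{E_{f/2}}{E_{f/2.8}} = \left(\frac{2.8}{2}\right)^{2} = 1.96 \approx 2,
\qquad \log_2 1.96 \approx 0.97\ \text{stops}
$$

So at a focal length both zooms cover, the f/2 lens gathers roughly twice the light, about one full stop more.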

Finally, the mirrorless design makes it easier to analyze the image from the sensor, e.g., for face or eye detection, to drive autofocus and exposure before the picture is taken. In contrast, with an SLR, the mirror blocks the sensor, so the image is not accessible while focusing. Some manufacturers, such as Nikon, add a secondary sensor to perform face detection when the mirror is down, but this further complicates the design and offers only limited resolution for analysis.

In the end, DSLRs still have slightly more pleasant viewfinders, but electronic viewfinders keep improving and are needed for video anyway. DSLRs have slightly better autofocus, but the gap is closing and, again, that advantage doesn’t apply to video (and face or eye detection are harder). In turn, mirrorless systems are smaller, simpler, easier to manufacture, probably more durable, allow more flexible optical design, and offer more opportunities for AI and image analysis before shooting.

Even for still photography, the mirrorless design is increasingly advantageous. In the case of video, the DSLR loses all its advantages because the mirror needs to stay up during capture.

There may also be a market incentive for camera manufacturers to encourage the switch to mirrorless. The market for DSLRs has plateaued, as most potential customers already own a DSLR and image quality is no longer improving quickly, in part because sensors are getting close to physical limits. Mirrorless could give people a reason to upgrade.

I don’t know how long it is going to take. Sony became a serious player with the a7R II, released in 2015, and it still doesn’t have a full ecosystem of lenses (no fisheye, limited telephoto options). Nikon and Canon only have a few lenses each. As some observers have pointed out, the next Olympics will give us some hints, since this is often when manufacturers release their flagship cameras. But I would be surprised if, ten years from now, DSLRs accounted for a serious share of camera sales.

About the author: Frédo Durand is a professor of Electrical Engineering and Computer Science at MIT. The opinions expressed in this article are solely those of the author. He works both on synthetic image generation and computational photography, where new algorithms afford powerful image enhancement and the design of imaging systems that can record richer information about a scene. His research interests span most aspects of picture generation and creation, with emphasis on mathematical analysis, signal processing, and inspiration from perceptual sciences. You can find more of his work and photos on his website. This article was also published here.