How Autofocus Works in Photography

When it was first introduced, many people sharply believed that autofocus would never have a clear place in the photography industry, puns intended. Why have a machine guess where to focus when you can just turn the focusing ring yourself? Today, autofocus systems are among the primary selling points for new camera and lens technology.

Active and Passive Autofocus Systems

If you find the focusing ring on your lens, you’ll probably see distances (in meters and/or feet) written along its circumference. These are the possible distances between your sensor and the point of focus, which is usually your subject. By this logic, it makes sense that an autofocus system would measure the distance to your subject and automatically turn the focusing ring to the matching focusing distance. This is the idea behind active autofocus (AF).

Active AF requires the camera to send a signal toward the subject, such as infrared light or ultrasonic sound waves, and then work out the distance when that signal bounces back to the camera. This works well in dark environments because it doesn’t rely on the existing light levels to function. However, it requires the path from the camera to the subject to be clear, and it doesn’t work very well with moving subjects. There is also a limit to how far the signal can travel and still come back to the camera, so active AF doesn’t work well with subjects that are far away. Active AF is nearly extinct in modern cameras because of its limitations.
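As a rough illustration of the bounce-back idea, here is a minimal sketch that assumes a time-of-flight design, where the camera times how long the signal takes to return. Not every active AF system works this way, and the function name and constants below are illustrative assumptions, not any manufacturer's implementation.

```python
# Approximate signal speeds (assumed values for illustration).
SPEED_OF_SOUND_M_PER_S = 343.0            # in air at roughly 20 degrees Celsius
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_echo(round_trip_seconds: float, signal_speed: float) -> float:
    """Return the one-way distance to the subject from a round-trip echo time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The signal travels to the subject and back, so halve the total path.
    return signal_speed * round_trip_seconds / 2.0


# A sound pulse that returns after 20 milliseconds.
subject_distance_m = distance_from_echo(0.02, SPEED_OF_SOUND_M_PER_S)
```

The sketch also hints at the range limit described above: the farther the subject, the weaker and later the returning signal, until the camera can no longer detect it.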

Passive AF is the primary autofocusing system used in modern cameras. Instead of sending out a signal to the surrounding environment, it works by only analyzing data coming into the camera. It uses two primary methods, which will be discussed next.

Passive AF: Phase Detection AF

This autofocus method is fast and fairly accurate, and it’s present in most modern professional cameras. It relies on principles that were widely implemented in rangefinder cameras, with changes to adapt to the digital age.

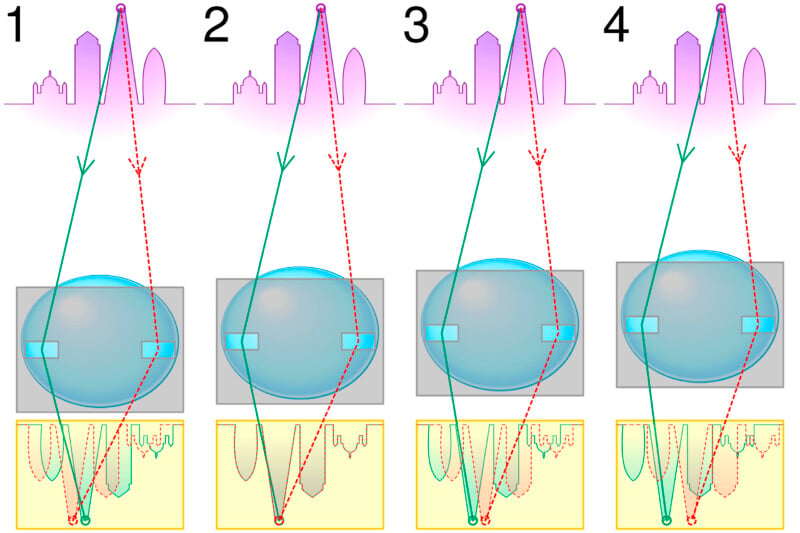

In DSLRs, a mirror reflects incoming light toward the viewfinder so that the photographer can see what they’re photographing. This mirror is partially transparent, so some light passes through it to a second mirror. The second mirror reflects the light down to autofocus sensors that usually sit near the bottom of a DSLR. These sensors have microlenses above them that split the light into two images.

Each DSLR focus point has sensors to gauge the distance, or phase difference, between these two images. To obtain a sharp image, the system adjusts focus until there is no phase difference between the split images, resulting in an image that is focused on the desired area. This is a quick and accurate process because the camera can determine how much and in which direction to turn the focusing ring based on the phase difference of the incoming light.
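To make the idea of a phase difference more concrete, the sketch below compares two one-dimensional brightness profiles, standing in for the two split images, and finds the offset that lines them up best. It is a simplified illustration under assumed names (`phase_shift`, `max_shift`), not how any particular camera's firmware works.

```python
from typing import Sequence


def phase_shift(left: Sequence[float], right: Sequence[float], max_shift: int) -> int:
    """Return the offset (in samples) that best aligns `right` with `left`.

    Zero means the two images coincide (in focus). The sign suggests which
    direction to move focus, and the size suggests how far.
    """
    best_shift, best_error = 0, float("inf")
    for shift in range(-max_shift, max_shift + 1):
        error, count = 0.0, 0
        for i, value in enumerate(left):
            j = i + shift
            if 0 <= j < len(right):
                error += abs(value - right[j])
                count += 1
        if count == 0:
            continue  # no overlap at this offset
        mean_error = error / count
        if mean_error < best_error:
            best_shift, best_error = shift, mean_error
    return best_shift


# The same bright detail appears two samples apart in the two images.
left_image = [0, 0, 1, 5, 1, 0, 0, 0, 0]
right_image = [0, 0, 0, 0, 1, 5, 1, 0, 0]
offset = phase_shift(left_image, right_image, max_shift=3)
```

Because a single comparison yields both a direction and a magnitude, the lens can be driven toward focus in one move instead of searching.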

One downside to phase detection AF is that it involves hardware that needs to be calibrated, which may include the camera’s mirrors, autofocusing sensors, and lenses. If any of this hardware falls out of calibration, there can be consistent issues with autofocus accuracy.

Mirrorless cameras lack mirrors, hence their name. Instead of using mirrors to bounce light to autofocus sensors like DSLR cameras, mirrorless cameras use pixels on their imaging sensors for phase detection. Some cameras have separate pixels for phase detection and imaging, while others use all pixels for both imaging and autofocus data (Canon’s Dual Pixel AF is the best-known example of this). Some mirrorless cameras also gather contrast-detection AF data, which will be discussed next.

Passive AF: Contrast Detection AF

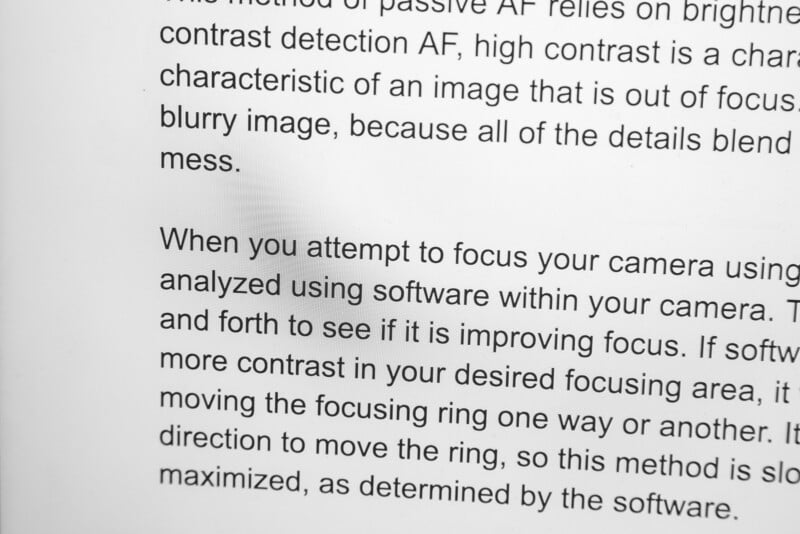

This method of passive AF relies on differences in brightness and color between neighboring areas of an image. With contrast detection AF, high contrast is a characteristic of a sharp image, while low contrast is a characteristic of an image that is out of focus. The term “muddy” is often used to describe a blurry image, because all of the details blend together in an indistinguishable, low-contrast mess.

When you attempt to focus your camera using autofocus, software within your camera analyzes data from the autofocus point(s). If the software determines that there can be more contrast in your desired focusing area, it will attempt to make the image sharper by moving the focus one way or another. It doesn’t know exactly how far or in which direction to move, so it has to shift focus back and forth and check whether each move improves contrast, which makes this method slower. The image is focused when contrast is maximized, as determined by the software.
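The trial-and-error search described above can be sketched as a simple hill climb. This is a minimal illustration, not a real camera algorithm: `contrast` is one assumed way to score sharpness, and `measure` is a hypothetical stand-in for "move the lens to this position, read the pixels at the focus point, and score them."

```python
from typing import Callable, Sequence


def contrast(pixels: Sequence[float]) -> float:
    """Score sharpness as the sum of squared differences between neighbors."""
    return sum((b - a) ** 2 for a, b in zip(pixels, pixels[1:]))


def hill_climb_focus(
    measure: Callable[[int], float], start: int, lowest: int, highest: int
) -> int:
    """Step the lens one position at a time until contrast stops improving."""
    position = start
    best = measure(position)
    direction = 1              # initial guess; the camera cannot know the right way
    reversed_once = False
    while True:
        candidate = position + direction
        if lowest <= candidate <= highest:
            score = measure(candidate)
            if score > best:   # sharper, so keep going this way
                position, best = candidate, score
                continue
        if reversed_once:      # neither direction improves: contrast peak found
            return position
        direction = -direction
        reversed_once = True


# A crisp edge scores higher than the same edge smeared across several pixels.
sharp_score = contrast([0, 0, 10, 10])
blurry_score = contrast([0, 3, 7, 10])

# Simulated lens whose contrast peaks at position 7 (made-up numbers).
focused_at = hill_climb_focus(lambda p: -((p - 7) ** 2), start=2, lowest=0, highest=10)
```

Every step costs a lens movement and a new measurement, and a wrong first guess wastes a step before the search reverses, which is why this approach tends to be slower than phase detection.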

This method is more accurate when focusing on still subjects because it doesn’t rely on calibrated mechanics to work correctly. However, in scenes with low contrast, it has a very hard time determining where the areas of high and low contrast are. Most DSLRs use this method when shooting in Live View because the mirror mechanism is not in a position to allow phase detection AF.

Passive AF: Hybrid AF

Some cameras make use of both phase detection and contrast detection AF systems since there are some advantages to both in different scenarios. They may switch between the methods depending on the scenario or shooting mode, or they may use both at the same time. Ultimately, both methods are ways to gather focusing data about the scene, and more data will generally lead to a more efficient autofocusing process.
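One hypothetical way to combine the two methods is to let phase detection supply a fast estimate and then let contrast detection fine-tune within a small window around it. The sketch below assumes that arrangement; the function and parameter names are illustrative, and real cameras may combine the data quite differently.

```python
from typing import Callable


def hybrid_focus(
    phase_estimate: int,
    measure_contrast: Callable[[int], float],
    search_radius: int,
) -> int:
    """Refine a phase-detection estimate by checking contrast at nearby positions."""
    candidates = range(phase_estimate - search_radius, phase_estimate + search_radius + 1)
    # Pick the nearby lens position with the highest measured contrast.
    return max(candidates, key=measure_contrast)


# Phase detection lands one step short; the contrast check corrects it.
refined = hybrid_focus(6, lambda p: -((p - 7) ** 2), search_radius=2)
```

The contrast search only has to cover a handful of positions, so the refinement adds accuracy without the long hunt that a full contrast search would need.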

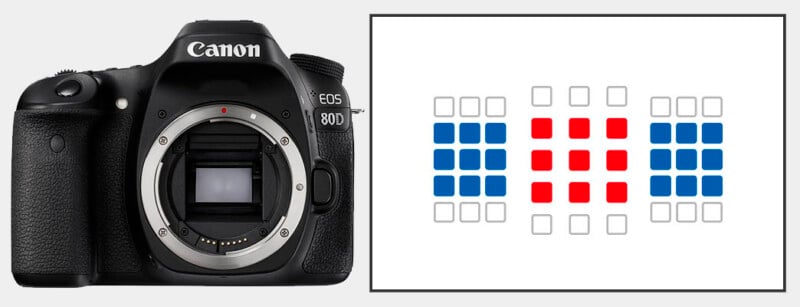

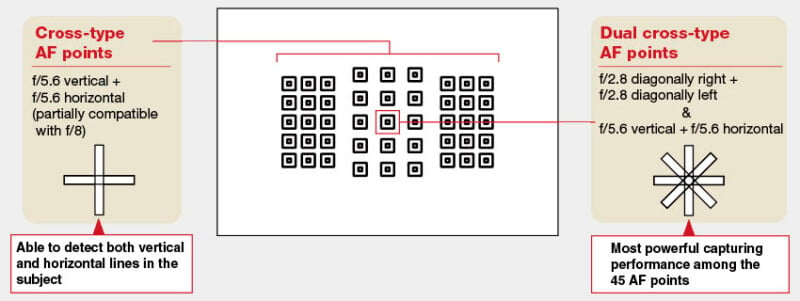

Focus Points

Focus points in phase-detect autofocus systems are different points in an image where your camera will attempt to achieve focus. Different cameras tend to have different numbers and types of focus points, and higher-end cameras tend to offer more of them, along with more capable types. Focus points are usually grouped into different area modes, such as spot, single-point, zone, and more. Your camera’s manual will tell you more about different focus point options.

The three main types of focus points are vertical, horizontal, and cross-type. Vertical and horizontal focus points detect contrast and generate autofocus data in vertical and horizontal directions only, while cross-type focus points work in both directions. Cross-type focus points are more accurate and versatile, but they tend to be more expensive than the other types.
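The difference between one-directional and cross-type points can be illustrated with a small grid of brightness values. In this sketch, a point that reads along only one direction finds nothing to work with when the detail in the scene runs the other way. The function names and sample patches are assumptions made for illustration.

```python
from typing import Sequence

Patch = Sequence[Sequence[float]]


def detail_along_rows(patch: Patch) -> float:
    """Total brightness change between left-right neighbors."""
    return sum(abs(b - a) for row in patch for a, b in zip(row, row[1:]))


def detail_along_columns(patch: Patch) -> float:
    """Total brightness change between up-down neighbors."""
    return sum(
        abs(lower - upper)
        for upper_row, lower_row in zip(patch, patch[1:])
        for upper, lower in zip(upper_row, lower_row)
    )


def cross_type_detail(patch: Patch) -> float:
    """A cross-type point can use detail in either direction."""
    return detail_along_rows(patch) + detail_along_columns(patch)


# Stripes running up and down, like a picket fence.
fence = [[0, 9, 0, 9], [0, 9, 0, 9], [0, 9, 0, 9]]
# Stripes running side to side, like window blinds.
blinds = [[0, 0, 0, 0], [9, 9, 9, 9], [0, 0, 0, 0]]
```

Reading along rows picks up the fence but returns zero for the blinds, and reading along columns does the opposite. The cross-type measure is nonzero for both, which is the versatility described above.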

Most mirrorless focus points are one-dimensional, partly because mirrorless cameras tend to have focus points integrated throughout the entire image sensor. There can easily be many more focus points in a mirrorless camera than in a DSLR, so there is less of a need for cross-type focus points. There are other, more technical reasons that mirrorless autofocusing technology limits the type of autofocus points used, but they are a bit beyond the scope of this article.

Focusing Modes

There are three main autofocus modes that most professional cameras offer. Each can be used in different scenarios, and you’ll get the most out of your camera by switching depending on your situation.

1. Single (AF-S or One-Shot AF): This allows your camera to lock focus when you press the focus button. If you or your subject moves, the camera won’t reacquire focus automatically. This is best for stationary subjects, such as landscapes or architecture.

2. Continuous (AF-C or AI Servo): The camera will attempt to track the subject and keep it in focus as it moves throughout the frame. This is best for moving subjects, such as wildlife, portraits of moving people, and sports photography.

3. Hybrid/Automatic (AF-A or AI Focus AF): This basically tells the camera to switch between Single and Continuous modes depending on whether it senses motion or not. This is best for situations where you know you’ll have both stationary and moving subjects and won’t have time to switch between modes on your own. Otherwise, this mode isn’t always accurate and you can get better results by setting your autofocus mode yourself.
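The automatic mode's decision can be pictured as a simple motion check. The sketch below is purely hypothetical: manufacturers don't publish how their cameras detect motion, and the distance readings, threshold, and names here are assumptions chosen to illustrate the idea of switching between Single and Continuous.

```python
from enum import Enum
from typing import Sequence


class FocusMode(Enum):
    SINGLE = "AF-S"
    CONTINUOUS = "AF-C"


def choose_mode(subject_distances_m: Sequence[float], motion_threshold_m: float) -> FocusMode:
    """Pick Continuous if the subject distance jumps between consecutive readings."""
    for earlier, later in zip(subject_distances_m, subject_distances_m[1:]):
        if abs(later - earlier) > motion_threshold_m:
            return FocusMode.CONTINUOUS
    return FocusMode.SINGLE


# A building barely changes distance; a runner approaching the camera does.
building_mode = choose_mode([3.0, 3.0, 3.01], motion_threshold_m=0.05)
runner_mode = choose_mode([3.0, 2.8, 2.5], motion_threshold_m=0.05)
```

Any fixed threshold will misjudge some scenes, such as a subject that moves slowly or sways slightly, which is one way to understand why this mode isn't always accurate.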

The Future of Autofocus

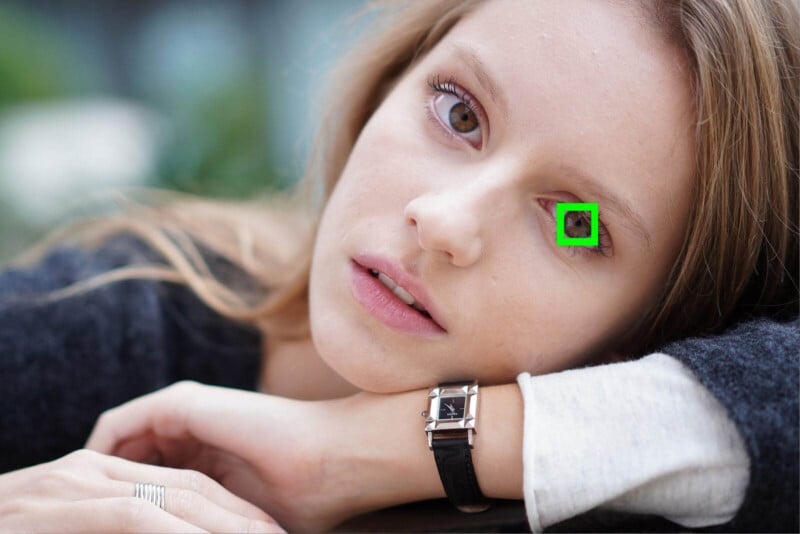

Manufacturers are starting to incorporate AI and machine learning into their autofocus systems. It’s likely that the actual mechanisms won’t change much in the next few years, but we’ll see revolutionary changes in the intelligence of the software that works with the autofocus technology.

Many new autofocus systems are already pulling from databases to learn about different focusing scenarios, such as weddings, different sports with helmets and other gear, and even specific people. Autofocus systems have expanded from being able to detect and focus on human eyes to animal eyes and animal subjects.

Additionally, improvements to systems such as eye-control autofocus will help further eliminate the camera’s guesswork. Is it possible that we’ll eventually have cameras that always know exactly what to focus on? It’s hard to say, but we’re getting closer and closer to near-perfect autofocus systems with every new development.

Conclusion

Autofocus modes, terminology, and methods can seem confusing in the beginning. However, they are some of the most important features to understand in your camera. A lot of innovation is going into developing even better autofocus systems, and it’s all building upon the methods explained here that work well in cameras today. The next time you get a little (or a lot of) help from autofocus, think about all of the extraordinary processes at work so that your camera can make an educated decision on how to help make your image sharp.

Image credits: Header photos from Depositphotos